Grasp

Supporting people with building a personalized machine learning model

#Web Design #HCI Research #Machine Learning

Type

Capstone Project (Research Track)

Duration

2019.02 - 2019.10 (8 months)

Team

Yeonji Kim, Kyeongyeon Lee

Tools

Figma (UI Design)

Excel, Python (Data Analysis)

My Role

I was responsible for user research and design of a tool used in the study, collaborating with another researcher who focused on implementing the tool.

📂 Literature Review : Literature on Interactive Machine Learning

🖍️ UX/UI Design : User flows and UI design of the tool

📋 User Study Methodology : Interview scripts, guidelines, tutorials, and metrics

👥 User Study : Conducted user experiments with 21 of the 31 participants

📊 Data Analysis : Qualitative & quantitative data analysis

📄 Thesis : Introduction, Related Work, User Study, Findings, Conclusion

Award & Recognition

3rd Place in Ewha Capstone Design Expo

Published in the International Journal of Advanced Smart Convergence (first author)

Overview of the Project

"How might we make the process of creating a machine learning model easier and more understandable for people without prior knowledge?"

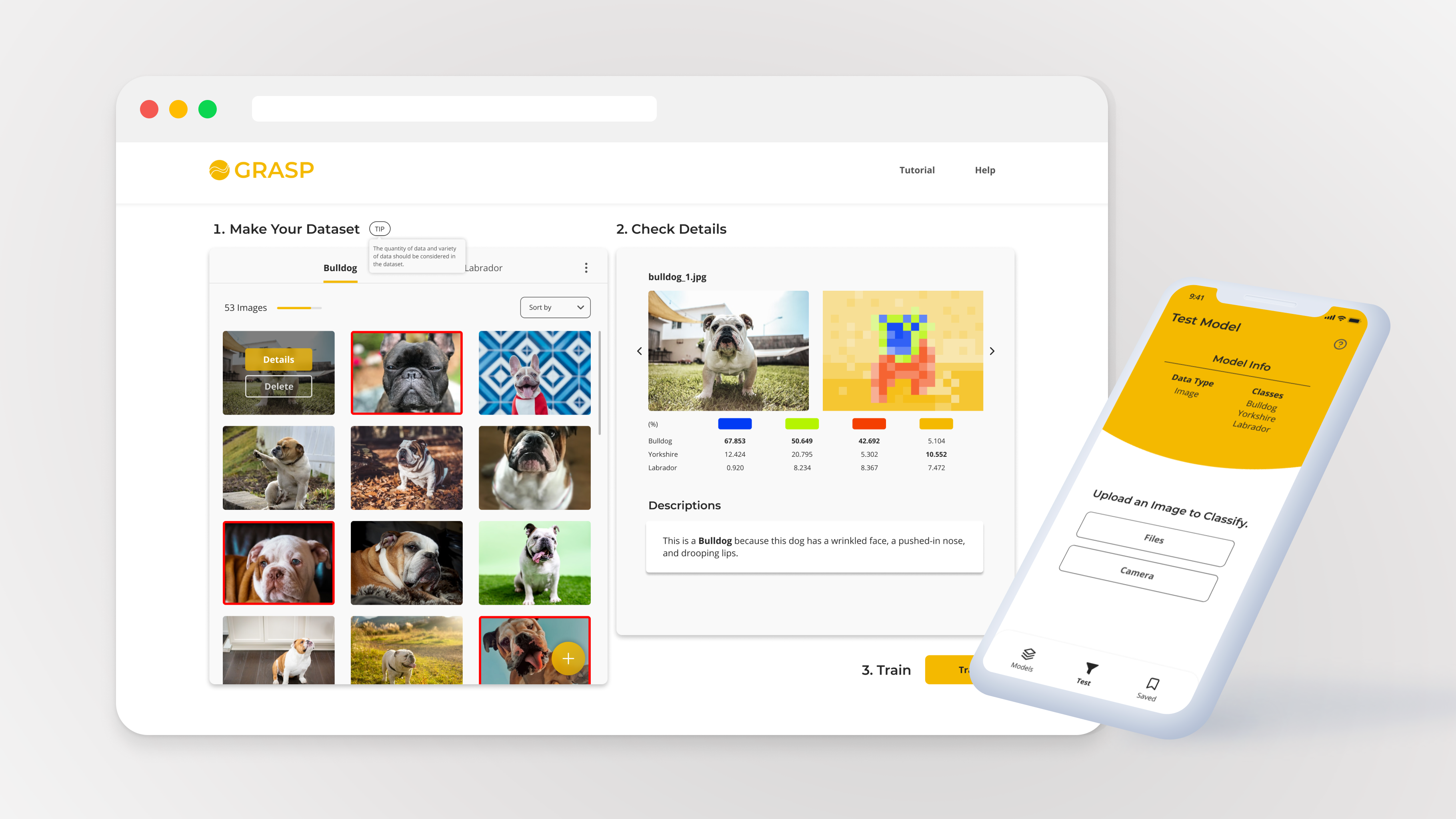

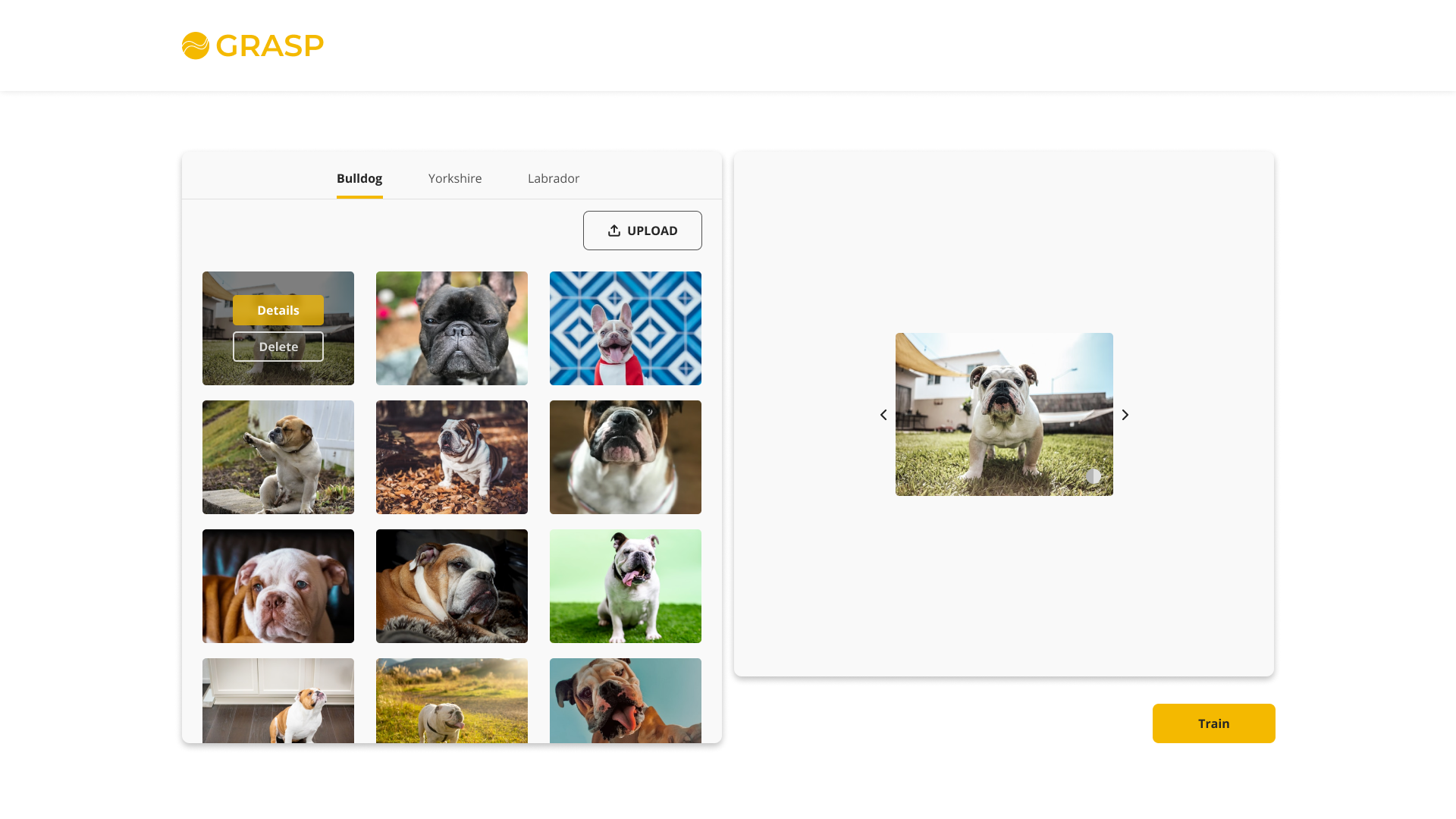

Grasp is a web-based tool we created to investigate opportunities for making machine learning technology more accessible to people. It is designed with features that help people make quick decisions and understand the underlying process of machine learning more easily. To observe whether the tool is effective in helping people create machine learning models, I conducted user experiments with 32 participants. Based on the findings from the user experiment, I developed the tool design to include an end-to-end experience and improve usability.

Target Users

People with little or no knowledge in machine learning

Goals

Better assist them with building a machine learning model

Method

Conduct user experiments & Design a machine learning model building tool

Outcome

Implications for designing interfaces for building ML models

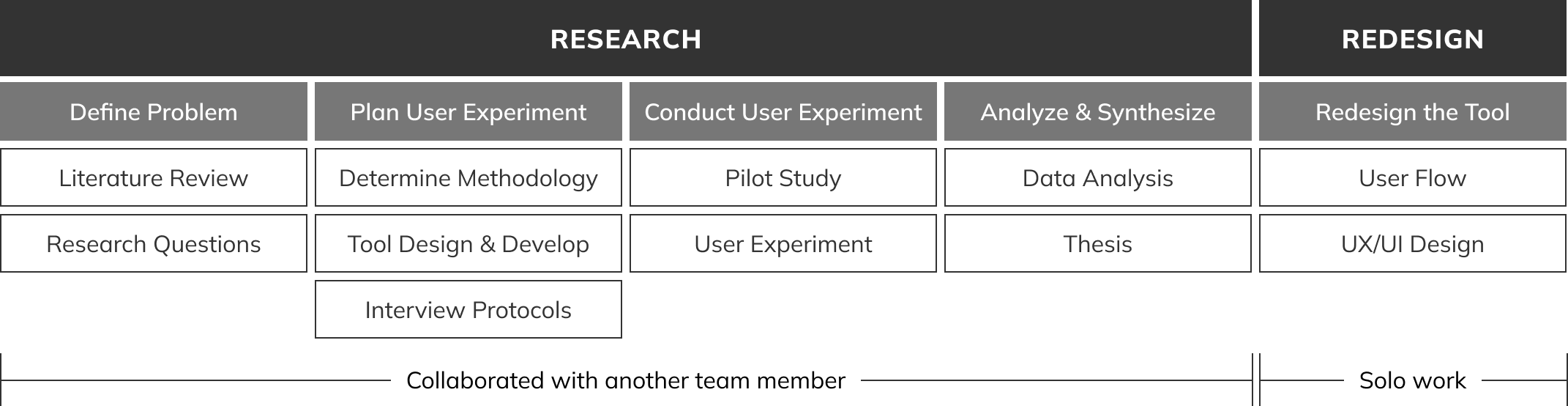

Process: Research → Redesign

This project consists of two parts: Research and Redesign of the tool.

The research focused on finding barriers and assessing features in an ML model-building tool for non-experts.

With findings from the research, I redesigned the tool to suggest a paradigm for ML model-building in the real world.

Part I - Research


Literature Review: What is the problem?

Studies have shown that it is not straightforward for non-experts to build ML models without prior knowledge and that they cannot build ML models in an efficient way.

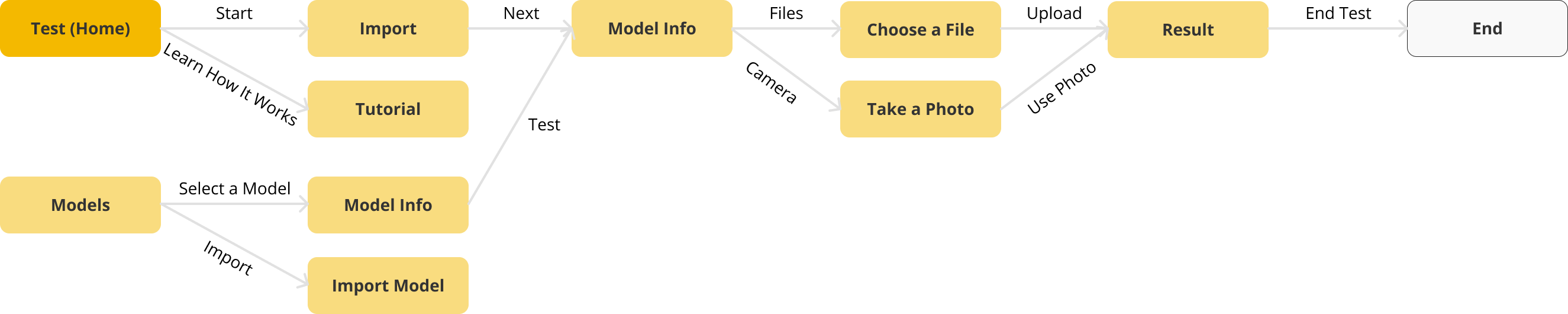

Research Question

"How might we make the process of creating a machine learning model easier and more understandable for people without prior knowledge?"

Non-experts: People with little or no prior knowledge in machine learning

Personas

To narrow down the problem, I defined personas as the people who would benefit most from machine learning technology becoming more accessible.

- Developers: Can develop their own products and need machine learning models, but don't know how to build them.

- People who want to learn machine learning: Can learn more about machine learning by getting hands-on experience.

Our Approach: Adding features in the ML building process

To better assist novice users with building a deep learning model, we decided to draw on the concepts of Interactive Machine Learning and Explainable Machine Learning from previous research.

Our definition for each concept:

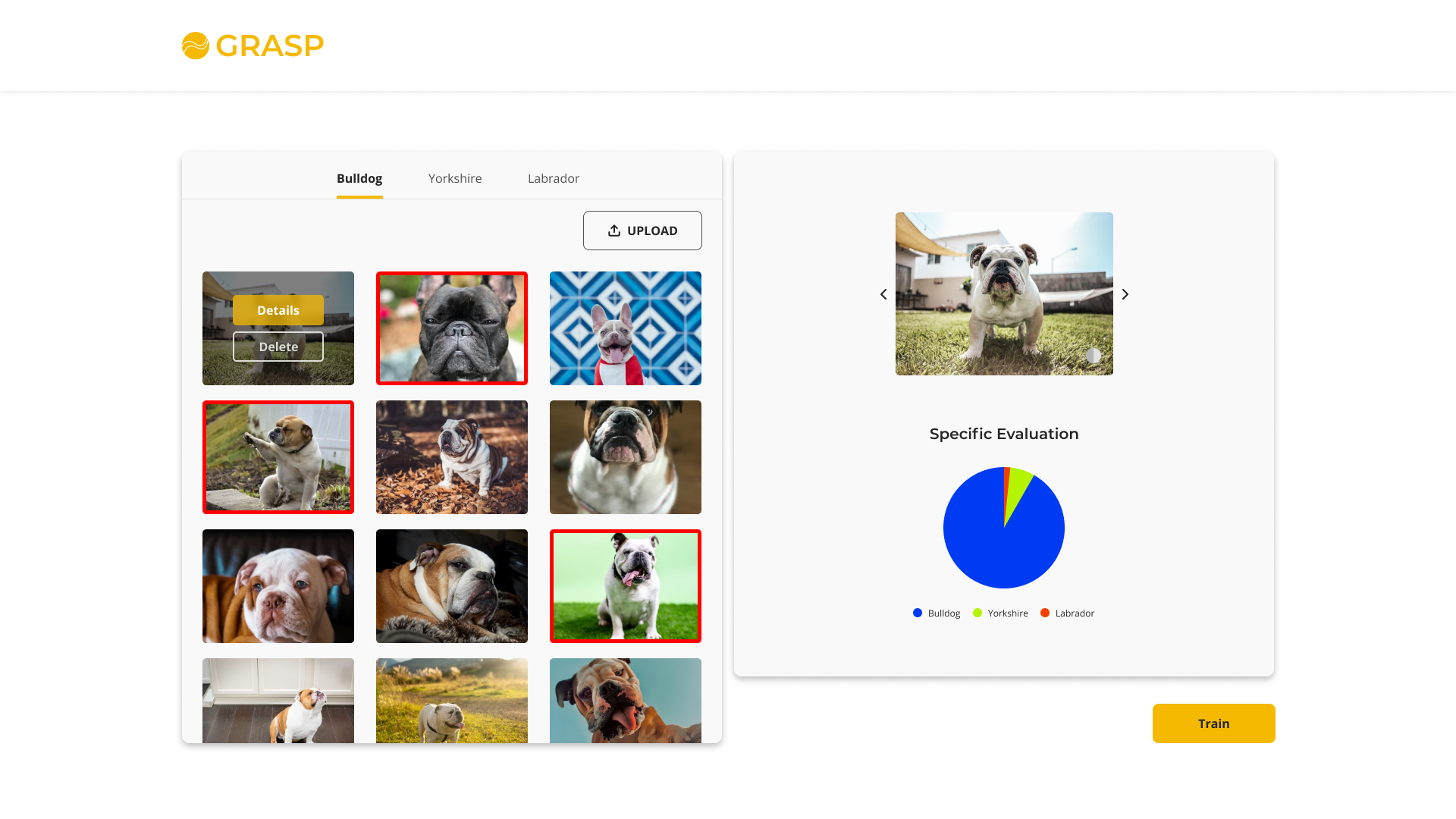

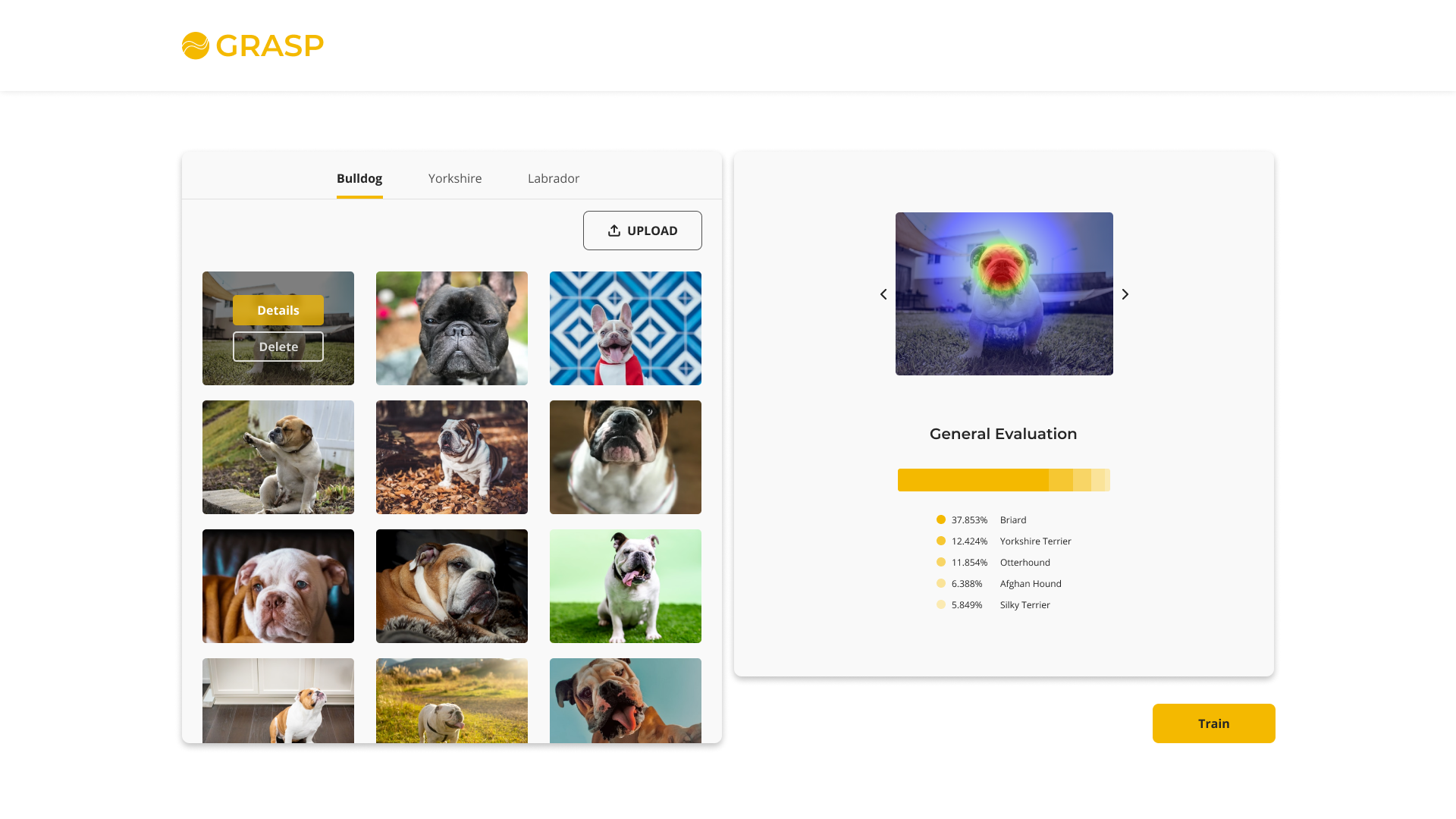

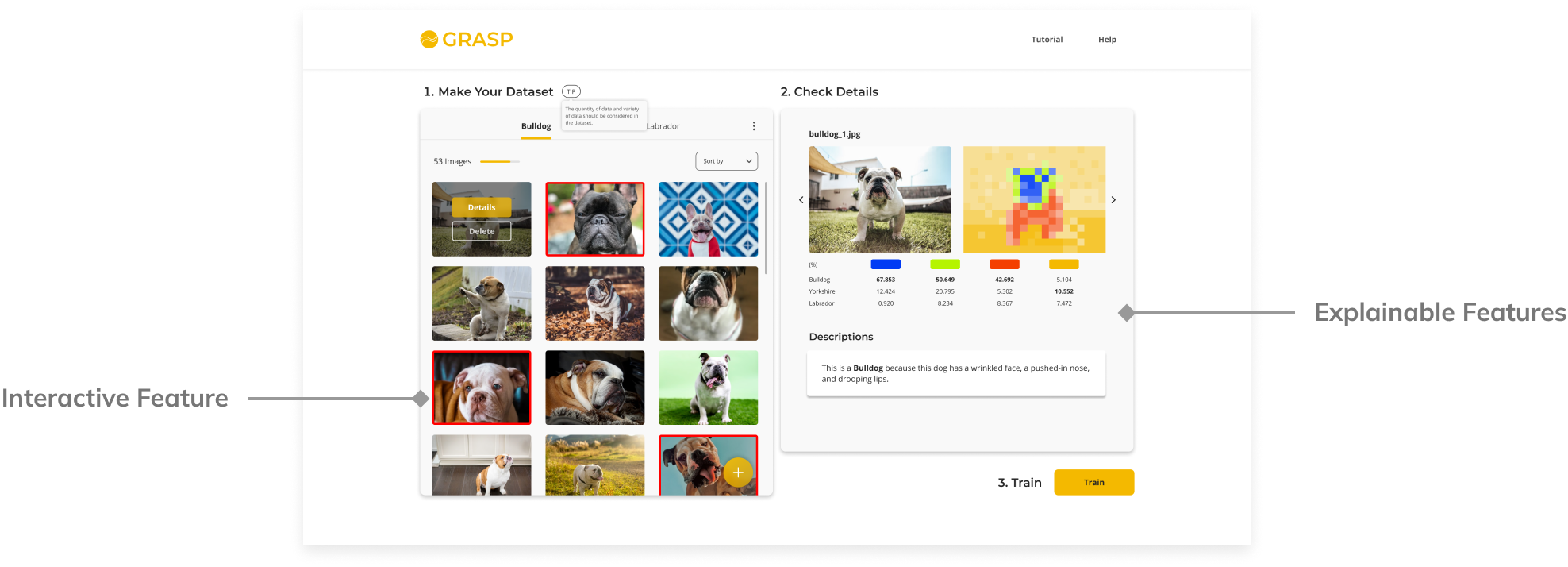

🏷️ Interactivity

Allowing users to quickly examine the impact of their actions and adapt subsequent inputs to obtain desired behavior when training ML models.
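The interactive loop described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how Grasp is implemented: a nearest-centroid classifier stands in for the model trained in the study, and the function names (`train_centroids`, `interactive_session`) and the toy data points are invented for this example. The point is the shape of the loop: every example the user adds triggers a retrain and an immediate accuracy readout.

```python
from math import dist


def train_centroids(examples):
    """Compute one centroid per label from (features, label) pairs."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, x in enumerate(features):
            acc[i] += x
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def predict(centroids, features):
    """Return the label whose centroid is closest to the given features."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


def accuracy(centroids, test_set):
    """Fraction of test examples classified correctly."""
    correct = sum(predict(centroids, f) == y for f, y in test_set)
    return correct / len(test_set)


def interactive_session(initial, additions, test_set):
    """Retrain after each user-supplied example and record accuracy right away."""
    training = list(initial)
    history = [accuracy(train_centroids(training), test_set)]
    for example in additions:
        training.append(example)  # the user's action
        history.append(accuracy(train_centroids(training), test_set))  # instant feedback
    return history


# Invented toy data: two classes in a 2D feature space.
initial = [((0.0, 0.0), "a"), ((10.0, 10.0), "b")]
additions = [((3.0, 3.0), "b")]
test_set = [((1.0, 1.0), "a"), ((4.0, 4.0), "b"), ((9.0, 9.0), "b")]
print(interactive_session(initial, additions, test_set))
```

Returning the full accuracy history, rather than only the final value, mirrors the idea that users adapt their next input based on what the previous one changed.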

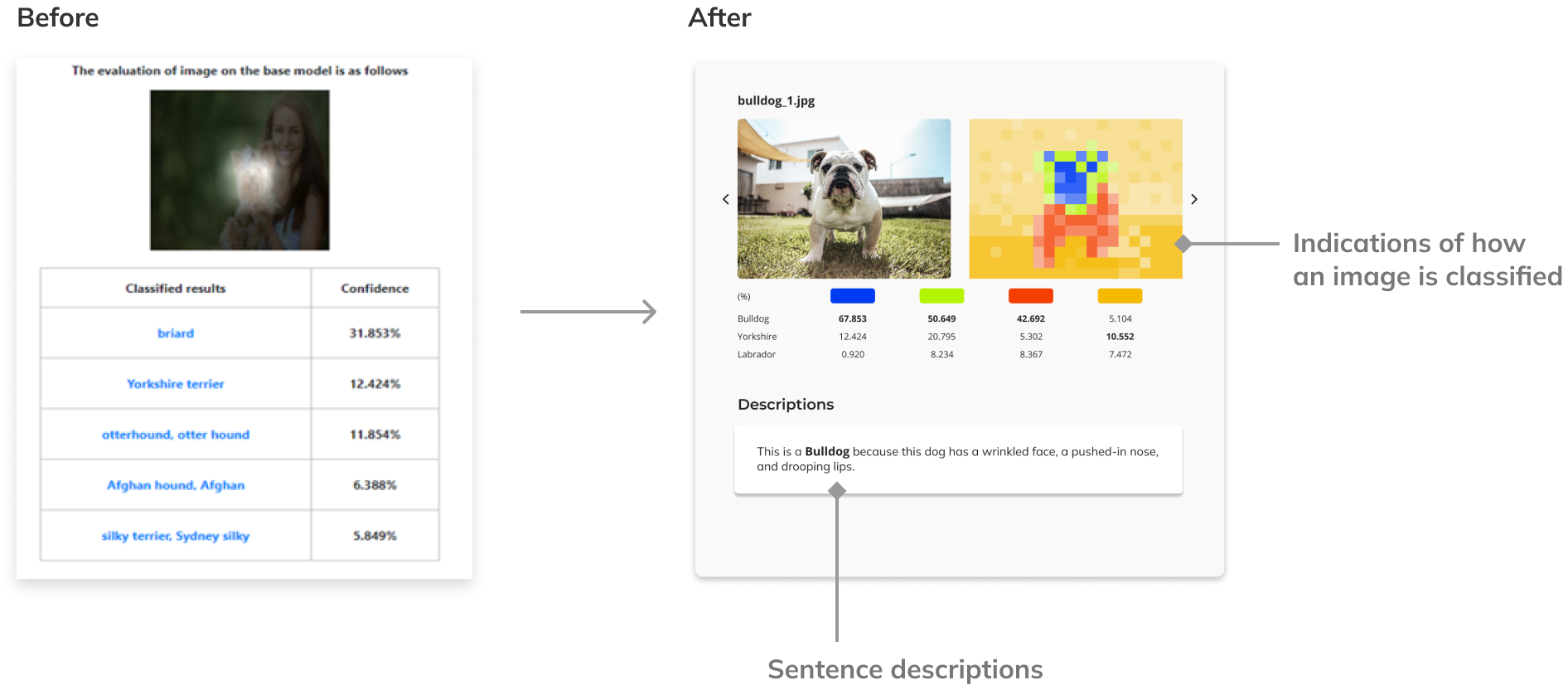

🏷️ Explainability

Uncovering the process of training or outcomes of an ML system with explanations presented in forms that are easy to understand for humans.

Hypothesis

Having 'interactive ML' and 'explainable ML' features would help non-experts build better ML models and understand the ML model-building process.

Creating a Tool to Validate Hypothesis

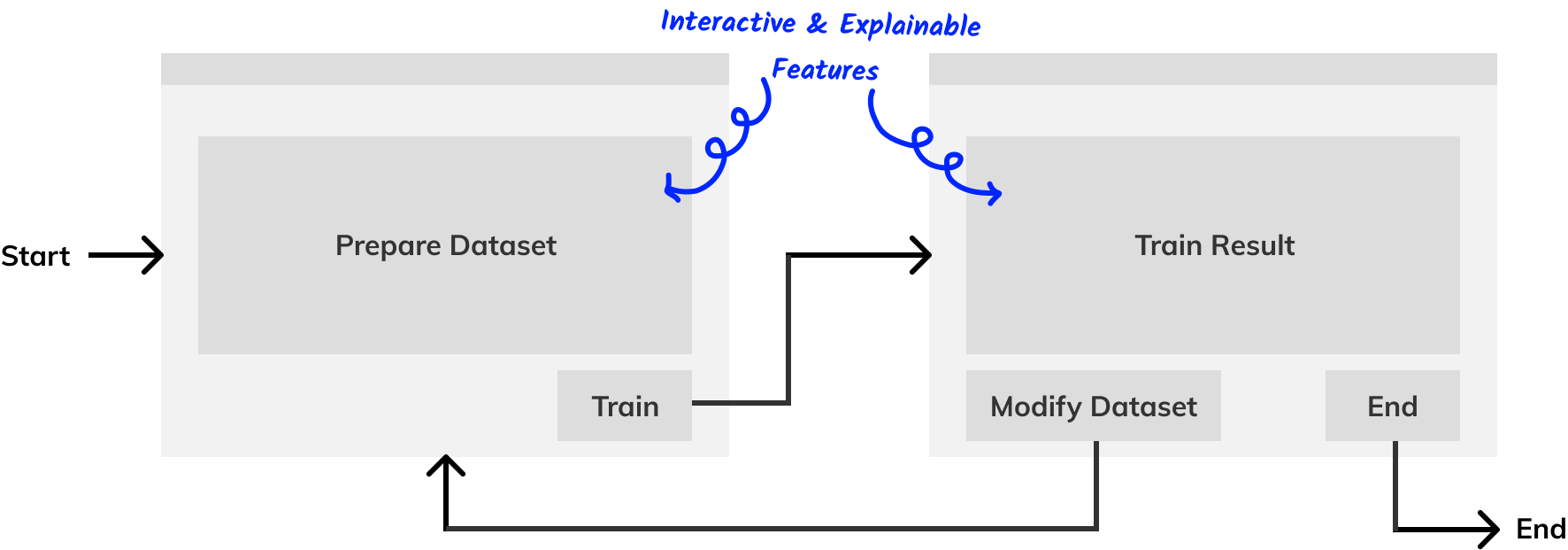

To observe how non-experts create ML systems and test the effectiveness of our approach, we decided to create an environment for building an ML model.

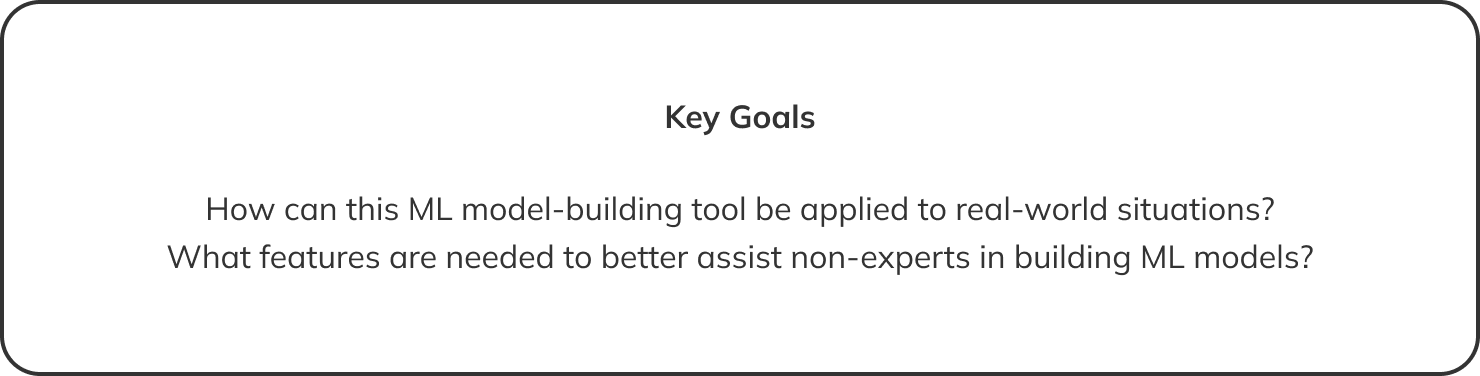

Key Challenge: (1) Help novices build machine learning models easily and (2) include interactive and explainable features.

Approach: Let users focus on easier processes: "preparing dataset" and "evaluating models".

Conducting User Experiment to Test the Tool

To investigate the effectiveness of interactivity and explainability and to identify potential barriers that non-experts would experience while building their ML systems, we conducted a study using a custom user interface designed for it.

Participants

31 participants who had little or no ML-related experience and 3 experts for comparison.

Data Condition

Image dataset with 3 classes related to dogs (Bulldog, Yorkshire, Labrador).

Task

Build a machine learning model with accuracy higher than that of the initial model, and as high as possible.

Experimental Conditions

The user study was conducted under the following four conditions to examine whether different types of feedback from a model-building tool have different effects on model accuracy and on users’ understanding of ML. Participants were assigned to one of the four conditions for the data preparation task.

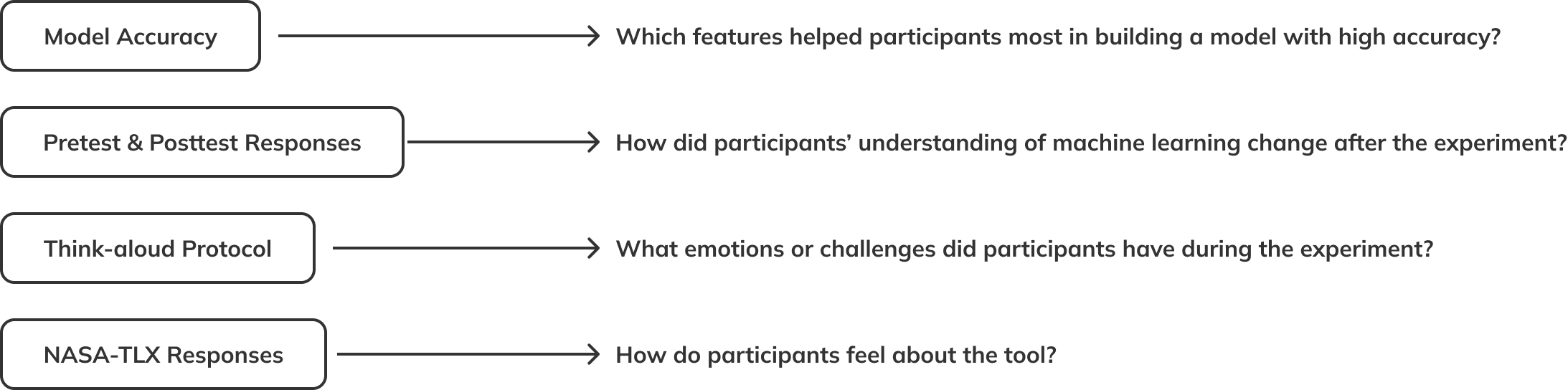

Interpreting Data from the Experiment

I analyzed the non-parametric data collected from participants, both qualitatively and quantitatively, using Python and Excel/Google Sheets.
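As one illustration of how non-parametric data from several conditions can be compared in Python, the sketch below computes the Kruskal-Wallis H statistic using only the standard library. It is an assumption that a rank-based test of this kind suits the data; this page does not state which test was actually used, and the sample values and condition names are invented purely for illustration.

```python
from itertools import chain


def average_ranks(values):
    """Return 1-based ranks, giving tied values the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def kruskal_wallis_h(groups):
    """Kruskal-Wallis H statistic with tie correction for a list of samples."""
    pooled = list(chain.from_iterable(groups))
    n = len(pooled)
    ranks = average_ranks(pooled)
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum * rank_sum / len(g)
        start += len(g)
    h = 12.0 / (n * (n + 1)) * total - 3 * (n + 1)
    # Tie correction: 1 - sum(t^3 - t) / (n^3 - n), where t is the size of each tie group.
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    correction = 1 - sum(c**3 - c for c in counts.values()) / (n**3 - n)
    return h / correction if correction > 0 else float("nan")


# Hypothetical accuracy gains (percentage points) per condition; not study data.
samples = {
    "condition_1": [2.0, 3.5, 1.0, 4.0],
    "condition_2": [5.0, 6.5, 4.5, 7.0],
    "condition_3": [3.0, 5.5, 6.0, 2.5],
    "condition_4": [8.0, 7.5, 9.0, 6.8],
}
print(kruskal_wallis_h(list(samples.values())))
```

With four groups, H is compared against a chi-square distribution with 3 degrees of freedom (critical value of about 7.81 at the 0.05 level). For small groups that approximation is rough, so an exact or permutation-based p-value may be preferable.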

Key Findings from the Experiment

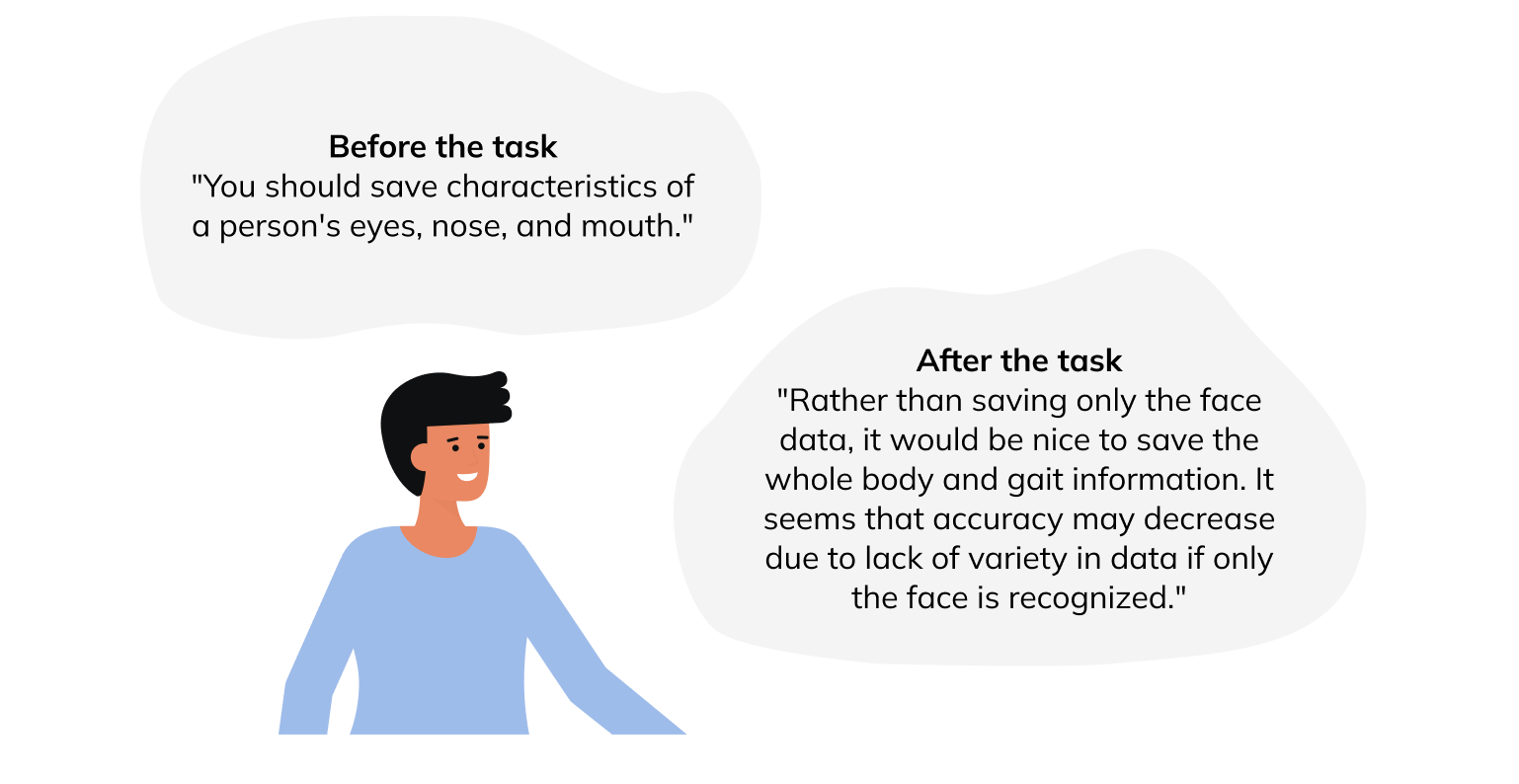

#1. Increased understanding of building a better ML model

- All participants' understanding of constructing an ML model improved after performing the task.

- Understanding increased regardless of data type, for both image and speech data.

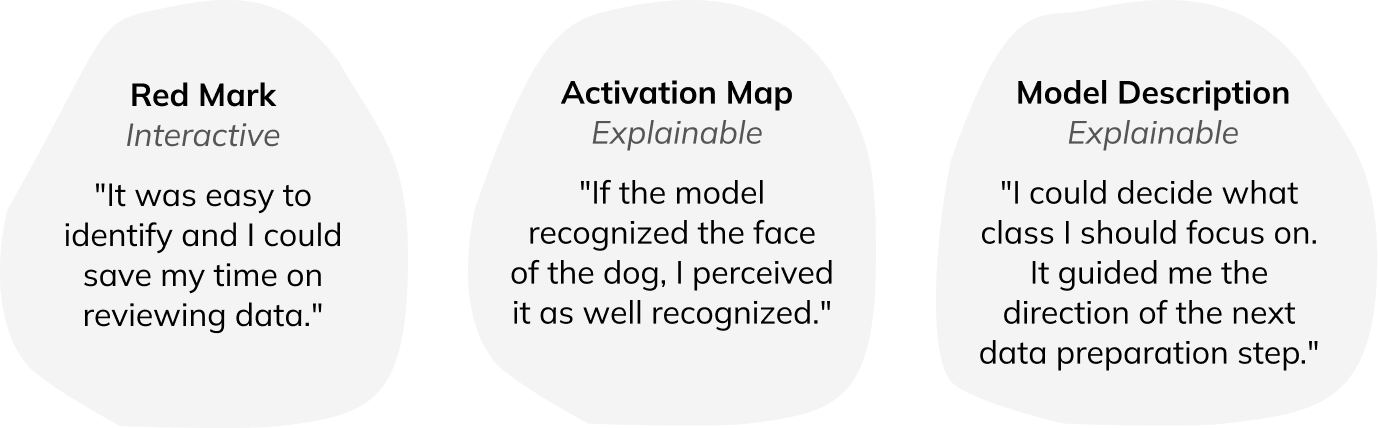

#2. Benefits for interactive and explainable feedback

- Interactive feedback has the advantage of providing immediate feedback to users.

- Explainable feedback deepened users’ understanding of building a better ML system.

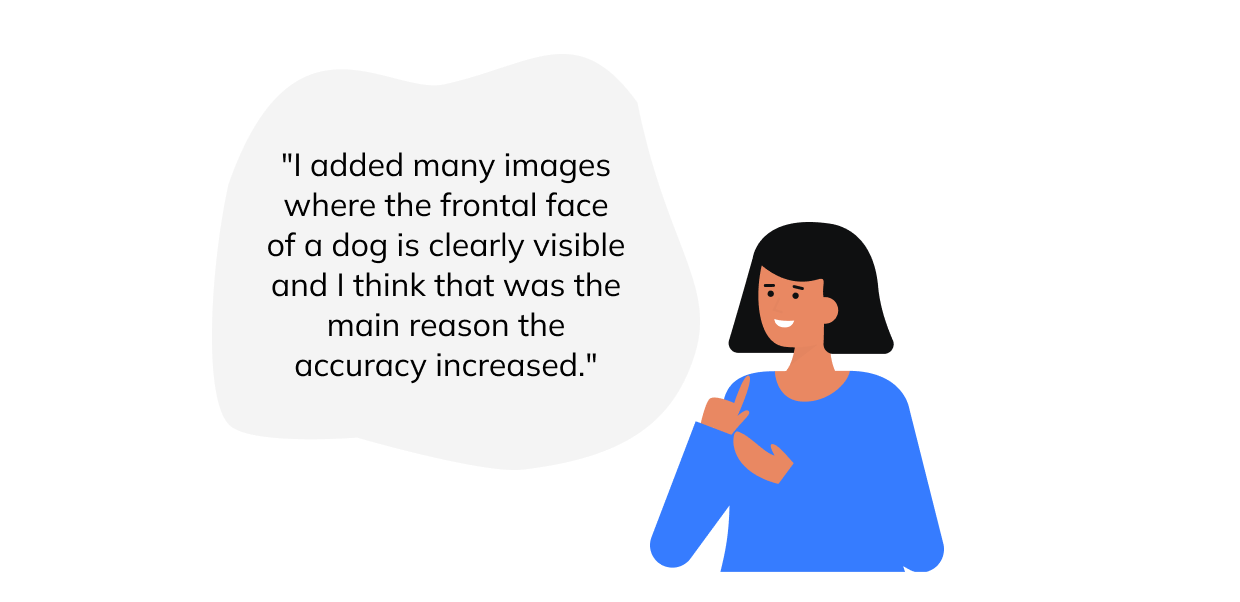

#3. Misconceptions formed due to absence of guidelines

Before and during the process, we observed that participants held misconceptions about ML, believing that a particular behavior of theirs was crucial to increasing accuracy when it may not have been the main cause.

For more details on the findings, please check out the full paper.


Part II - Redesign

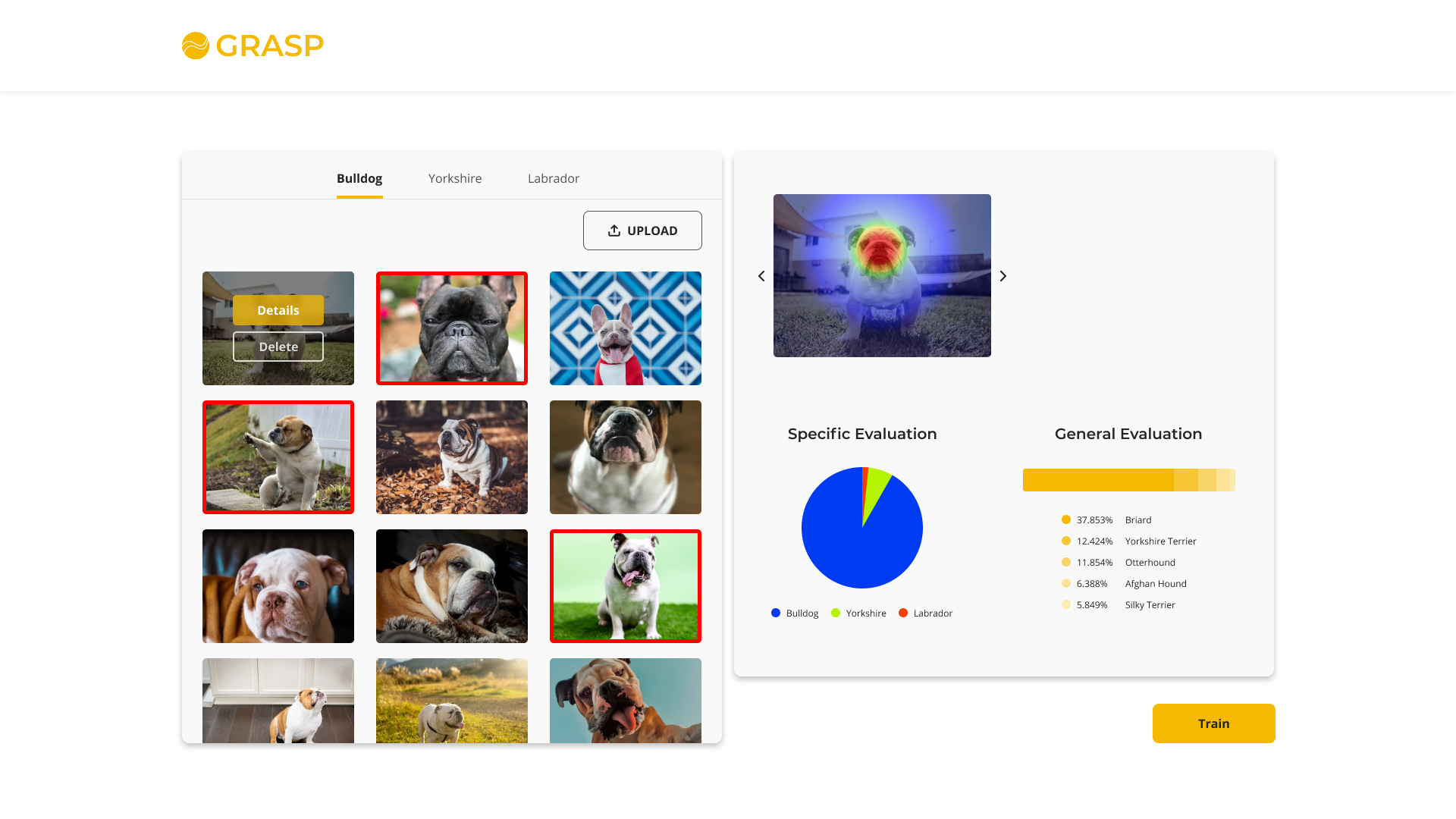

Redesigning the Tool

Having discovered how to better help non-experts build machine learning models and identified potential barriers, I redesigned the tool to be more accessible and usable for non-experts.

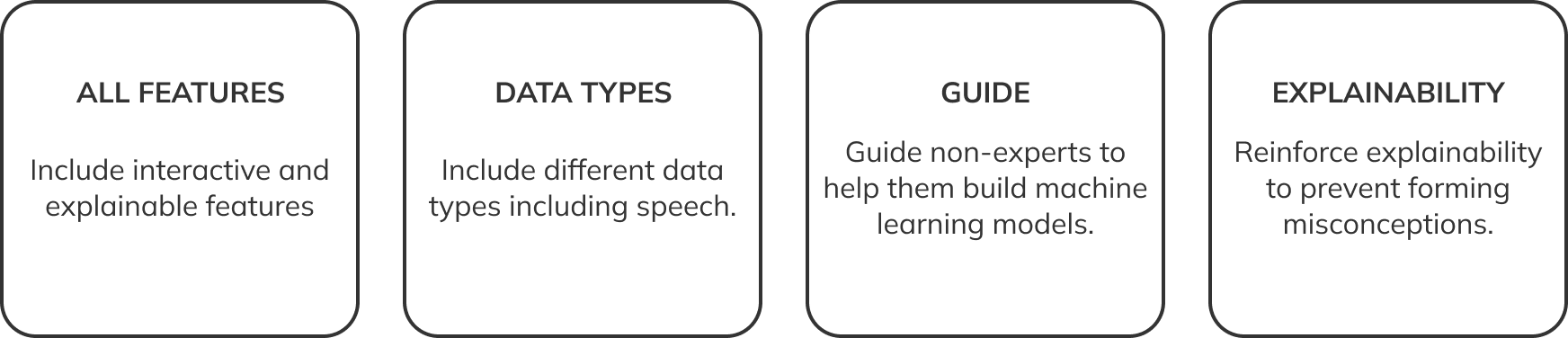

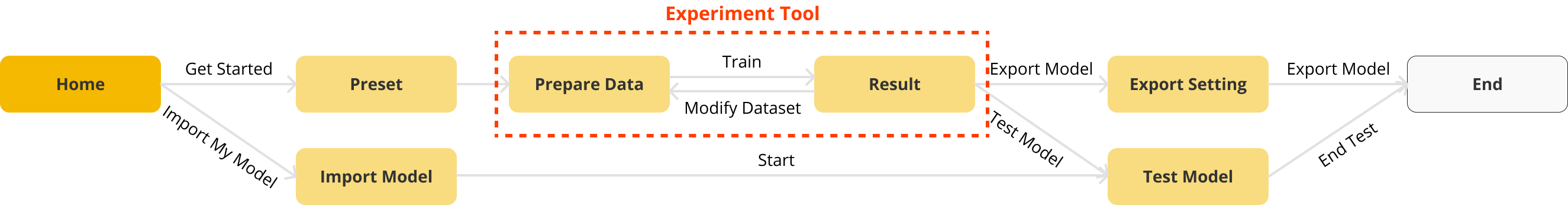

I also designed the tool to provide the whole experience of making a model, testing it, and exporting it to a web or mobile environment.

APPROACHES

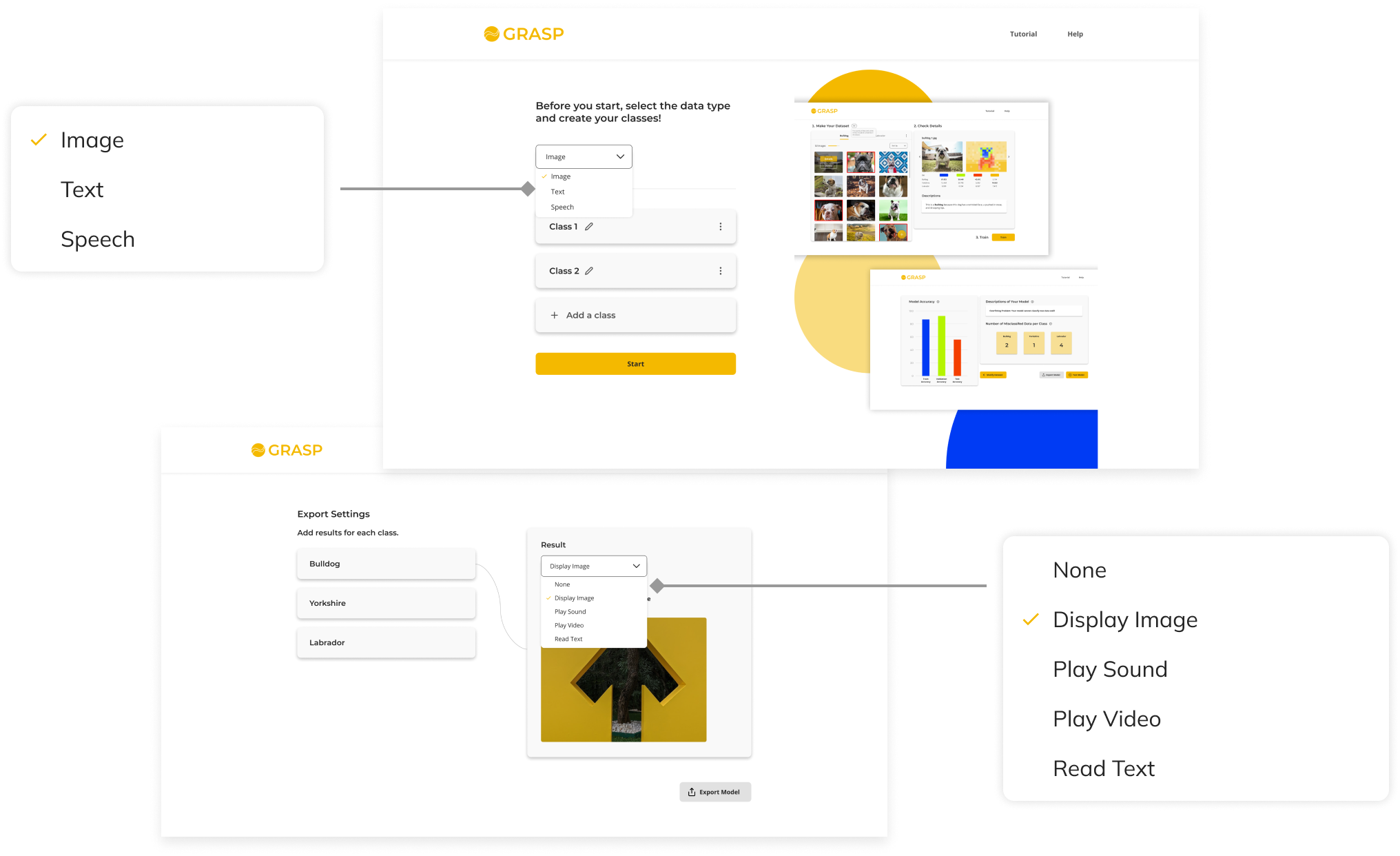

Adding End-to-end Experiences

To support onboarding, as well as exporting and testing after a machine learning model is built, I added more screens to the web application. Considering the use cases for testing models, I also assumed that a mobile application would make the tool more usable and accessible.

Grasp on Desktop

Grasp on Mobile

Developing the Tool into High Fidelity Layouts

1. ALL FEATURES

Since all features helped people build better machine learning models, I kept the features that were used in the experiment. However, I refined their explainability, referring to state-of-the-art technologies.

2. DATA TYPES

To help users build machine learning models with different data types, I included image, text, and speech options in the model-building flow. When exporting a model they built, users can select which data and file types should appear in the result: image, text, speech, or video.

3. GUIDE

For the screens where users build machine learning models, I included tooltips and text descriptions on each page that would be helpful to users and guide them with appropriate information.

Testing with Users

After designing the layouts, I recruited two people with no prior knowledge of machine learning and tested the prototype. The goal was to test usability and check whether users could complete the tasks of building a machine learning model and exporting it.

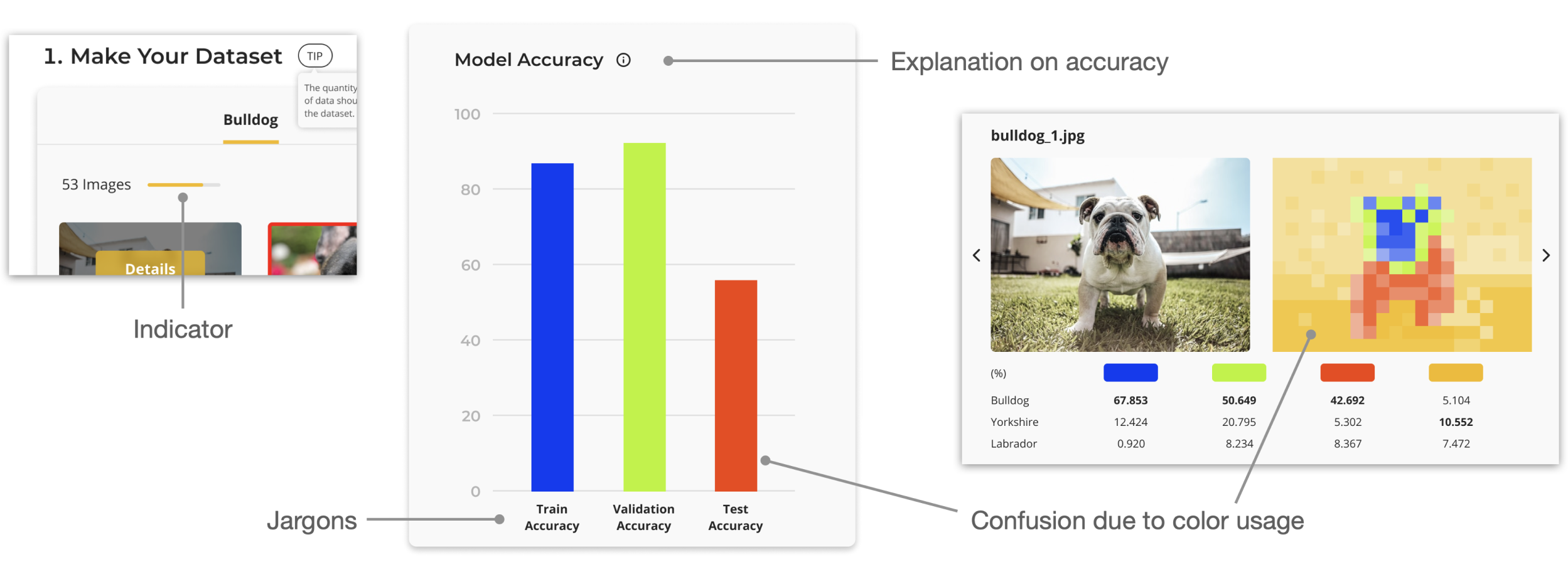

Defined Issues

- Use of Colors: I reused the same colors across the system, which led participants to think that same-colored elements were related and represented the same data.

- Remaining Jargon: Some jargon still lacked sufficient explanation, which made the system harder to use.

- Unclear Visual Element: The indicator was meant to tell users that they needed more images to train the model. However, participants perceived it as the maximum number of images they could add.

Reflections

Designing and Deciding with Concrete Evidence

Working on a research project required me to be precise in every decision I made. While planning how to validate my research questions, I reviewed and verified previous experiments and tools so that I could apply their research methods and design approaches. All decisions were based on evidence from other valid research or solutions. When writing the implications in the paper, it was also crucial to ground the discussion points in our findings from the experiment rather than interpret them subjectively. This practice equipped me with the skill to explain my design decisions logically and objectively, with proper evidence.

Next Steps: Finding Use Cases for Real-World Applications

From the research, I found how to better assist people in building machine learning models. By redesigning the tool with an end-to-end experience, I thought about how to make it accessible and usable for people with no prior knowledge. However, real usage still seems limited to educational purposes or to helping developers with no expertise in machine learning. I'm planning to conduct user interviews and research to find use cases in which non-experts can put this tool to use.